The idea is 70 years old, but it took decades to make it possible and decades more to make it commonplace

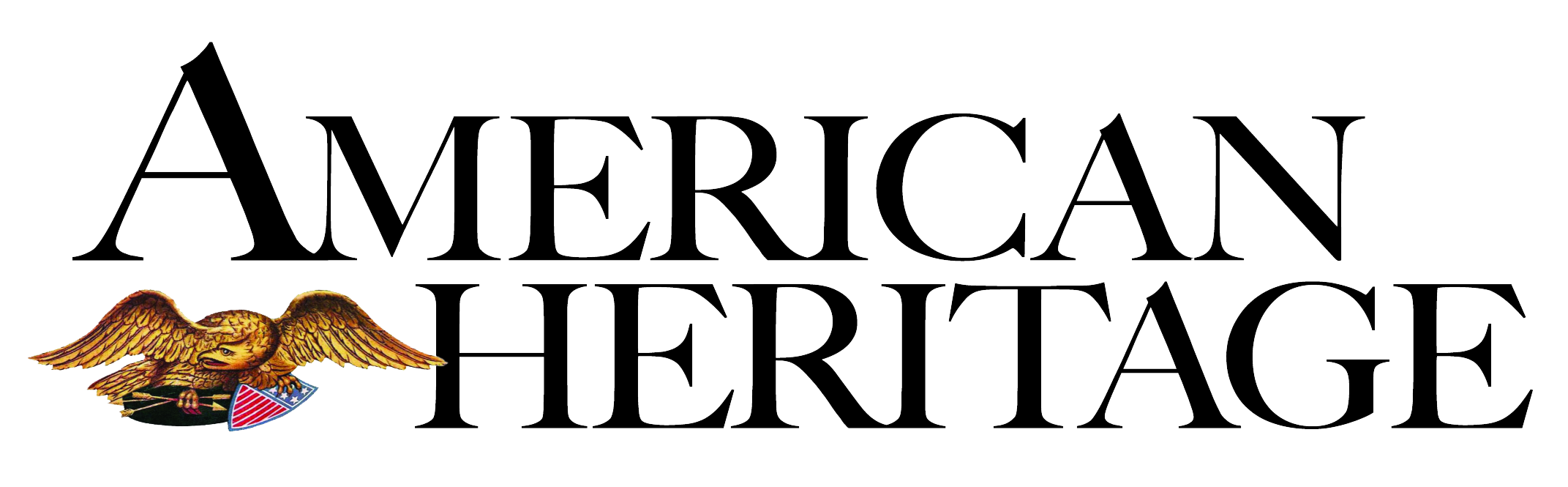

After the telephone was developed in the mid-1870s, and radio at the turn of the century, it was natural to seek ways to combine the two, merging radio’s mobility with the telephone’s person-to-person capability and extensive network. Ship-to-shore radiotelephones were available as early as 1919, and the next decade saw the arrival of two-way radios for police cars, ambulances, and fireboats. These public-safety radios were mobile, but they were limited by the range (usually small) of the transmitter, and they connected only with fellow users, not with every telephone subscriber. Also, like all radio equipment of the day, they were big and clunky; Dick Tracy’s two-way wrist radio existed only in the funny pages.

World War II produced a number of improvements in radio. Tanks in Gen. George S. Patton’s 3rd Army were equipped with crystal-controlled FM radio sets. They were easy to use and provided reliable, static-free conversations while driving over rough terrain. The Handie-Talkie and the backpack walkie-talkie could go anywhere a soldier went. At war’s end, battlefield radio improvements came to civilian equipment. In July 1945 Time magazine said American Telephone & Telegraph (AT&T) was ready to manufacture “a new two-way, auto-to-anywhere radio-telephone for U.S. motorists.”

(Motorola Archives, reproduced with permission from Motorola, Inc.)

AT&T was much more than a telephone company. It performed pioneering work in television, computers, microwave relays, materials research, and a thousand other interests. It was also an irreplaceable defense contractor, helping with projects like the DEW Line, NORAD, and the Nike missile program. By government policy, the company held a near monopoly on telephone communications; otherwise, it was thought, there would be a multiplicity of competing, incompatible systems. AT&T controlled about 80 percent of local American telephone lines, with the rest in the hands of scores of small, local companies. Long-distance service was even more heavily dominated by AT&T. Despite, or perhaps because of, this monopoly, AT&T delivered excellence, building the finest telephone system in the world.

America’s mobile phone age started on June 17, 1946, in St. Louis. Mobile Telephone Service (MTS), as it was called, had been developed by AT&T using Motorola-made radio equipment, and Southwestern Bell, a subsidiary of AT&T, was the first local provider to offer it. These radiotelephones operated from cars or trucks, as would all mobile phones for the next quarter century. The Monsanto Chemical Company and a building contractor named Henry Perkinson were the first subscribers. Despite having only six channels (later reduced to three), which resulted in constant busy signals, MTS proved very popular in St. Louis and was quickly rolled out in 25 other major cities. Waiting lists developed wherever it went.

No one in 1946 saw mobile telephony as a mass market. The phones were big, expensive, and complicated to use, and callers had little privacy. Worst of all, only a tiny sliver of bandwidth around 150 MHz was available for the spectrum-hungry service. Still, it was a start. MTS would be modified somewhat over the years, but it was basically the way all mobile phones operated until cellular technology came along in the 1980s.

MTS didn’t work like a regular telephone. Instead of picking it up and dialing, you turned the unit on, let it warm up, and then rotated the channel selector knob in search of a clear frequency. If you didn’t hear anyone on, say, Channel 3, you pushed the talk button on your microphone and called the operator. (If you did hear someone talking, you could listen in on the conversation, though you weren’t supposed to.) Then you gave the operator your mobile telephone number and the number you wanted to call. The operator, sitting at a cord-and-jack switchboard, dialed the number and connected your party to the line. With MTS you could either talk or listen, but not both at once, so callers could not interrupt each other. (Simultaneous talking and listening, a later innovation, requires the use of two frequencies; this is known as full-duplex mode.)

Three minutes on an MTS network in the late 1940s cost about 35 cents, the equivalent of $3.50 today. Toll charges could also apply, and then there was a whopping monthly service charge of $15.00. If you made one three-minute call per day on your car phone, the cost per call would work out to about $8.00 in today’s money, exclusive of toll charges.
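The arithmetic behind that per-call figure can be checked with a short sketch. The 30-day month and the flat tenfold inflation multiplier (implied by the 35 cents to $3.50 comparison) are assumptions, as are the names used; under them the result comes to roughly $8.50, in the neighborhood of the figure quoted above.

```python
# Rough check of the per-call cost of early MTS service.
# Assumptions (not stated in the article): a 30-day month and a flat
# tenfold inflation multiplier, implied by "35 cents ... $3.50 today".

CALL_CHARGE = 0.35          # dollars per three-minute call, late 1940s
MONTHLY_CHARGE = 15.00      # dollars per month, late 1940s
DAYS_PER_MONTH = 30         # assumed
INFLATION_MULTIPLIER = 10   # assumed


def cost_per_call_today(calls_per_day: int = 1) -> float:
    """All-in cost of one call in today's dollars, excluding toll charges."""
    calls_per_month = calls_per_day * DAYS_PER_MONTH
    service_share = MONTHLY_CHARGE / calls_per_month  # monthly fee spread per call
    return round((CALL_CHARGE + service_share) * INFLATION_MULTIPLIER, 2)


print(f"${cost_per_call_today():.2f} per call at one call per day")
```

Making more calls spreads the monthly charge thinner, which is why the per-call figure falls quickly for heavier users.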

Moreover, radiotelephones were big and power-hungry. The user’s transmitter and receiver filled the trunk and weighed 80 pounds between them. Their vacuum tubes ran hot enough to bake bread. A car’s headlights dimmed while transmitting, and using the radio without running the engine would kill the car battery.

Who would put up with all these problems? Plenty of people, judging by the waiting lists. Never mind the trouble or expense; the need to communicate was vital then, just as it is today, and there was no other way to connect to the telephone system when you were mobile.

That was early radiotelephony from a customer’s point of view. On the network side, MTS posed a different set of problems. It used a “high tower, high power” approach. In this brute-force method, the tallest possible tower was built, and the maximum allowable amount of power was blasted through it. A signal of 250 watts or more could reach any mobile within dozens of miles, but it also monopolized the use of a given channel throughout the area. As remains true today, too few channels existed for them to be used so inefficiently.

The severe shortage of channels had several causes. First of all, many types of radio technology were competing for a limited amount of bandwidth, including commercial radio and television broadcasting, hobbyists, emergency services, airplane navigation, and numerous others. To make things worse, MTS’s inefficient equipment used 60 kHz of bandwidth to send a signal that can now be sent using 10 kHz or less, so within the sliver of bandwidth it was granted, it could pack only a few channels.

The Federal Communications Commission (FCC) was not about to allot more frequencies to a service that was so expensive and seemed to have such limited application. That’s why, at peak hours in some areas, 40 MTS users might be hovering over their sets, waiting to jump on a channel as soon as it became free. Most mobile-telephone systems couldn’t accommodate more than 250 subscribers without making the crowding unbearable. But even as the first mobile-telephone networks were being installed, there was already talk in the industry about an entirely new radiotelephone idea. Back then it was called a small-zone or small-area system; today we call it cellular.

The Cellular Idea

In December 1947 Donald H. Ring outlined the idea in a company memo. The concept was elaborate but elegant. A large city would be divided into neighborhood-size zones called cells or cell sites. Every cell site would have its own antenna/transceiver unit. (MTS, by contrast, had a single large transmitter near the middle of the coverage area and a few smaller receivers, sometimes only one in smaller cities, scattered through the rest of it.) This antenna/transceiver unit would use a “low tower, low power” approach to send and receive calls to mobiles within its cell.

(Courtesy of AT&T Archives and History Center, Warren, N.J.)

To avoid interference, every cell site would use a different set of frequencies from neighboring cells. But a given set of frequencies could be reused in many cells within the coverage area, as long as they did not border each other. This approach reduced power consumption, made it easier to expand service into new areas by adding cells, and eliminated the problem of signals getting weak toward the edges of the coverage area.
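The reuse rule can be illustrated with a small sketch. It assumes hexagonal cells addressed by axial coordinates and a band split into seven frequency groups; both are common textbook conventions rather than details from Ring's memo, and the function names are hypothetical.

```python
# Sketch of frequency reuse on a grid of hexagonal cells.
# Assumptions (not from the article): cells are hexagons addressed by
# axial coordinates (q, r), and the band is split into seven groups.

NUM_GROUPS = 7

# Offsets to the six cells that border a hexagonal cell.
NEIGHBOR_OFFSETS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]


def frequency_group(q: int, r: int) -> int:
    """Assign a frequency group (0-6) so that bordering cells never match."""
    return (q + 3 * r) % NUM_GROUPS


def neighbors(q: int, r: int):
    """Return the coordinates of the six bordering cells."""
    return [(q + dq, r + dr) for dq, dr in NEIGHBOR_OFFSETS]


def reuse_is_valid(radius: int) -> bool:
    """Check every cell in a square patch of coordinates against its neighbors."""
    for q in range(-radius, radius + 1):
        for r in range(-radius, radius + 1):
            own = frequency_group(q, r)
            if any(frequency_group(nq, nr) == own for nq, nr in neighbors(q, r)):
                return False
    return True


print(reuse_is_valid(5))
```

With this assignment a cell and its six neighbors use all seven groups between them, and the same group reappears a few cells away, so adding cells adds capacity without adding spectrum.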

So why wasn’t cellular service introduced in the 1940s or 1950s? The biggest answer is the lack of computing power. When Ring wrote his paper, the mainframe computer age, which now seems as remote as the Pleistocene epoch, was still in the future. Computing would be indispensable in the development of cell phones because cellular technology requires complicated switching protocols that were far beyond the capabilities even of AT&T, the world leader in switching.

Here’s why: When a mobile phone moves from one cell into another, two things have to happen. The call has to be transferred from one transceiver to another and it has to be switched from one frequency to another, in both cases without interruption. Keeping a call connected while switching channels is quite a feat, as is choosing the proper channel to switch to, particularly when a network has thousands of customers and dozens of cell sites to manage all at once. This sort of rapid switching is something that only high-speed computers can do. In an age when room-size mainframes had less computing power than the typical hand-held device of today, Ring’s plan remained firmly in the idea stage.
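The two steps of a handoff, moving the call to a new transceiver and to a new frequency, can be sketched in a few lines. The class and function names are hypothetical, and the rule of taking the lowest-numbered free channel is an assumption made for illustration; a real network would also weigh signal strength and interference.

```python
# Sketch of handing a call from one cell to the next.
# Assumptions (not from the article): the names used here and the rule of
# picking the lowest-numbered free channel in the new cell.


class Cell:
    def __init__(self, name, channels):
        self.name = name
        self.free = set(channels)   # channels not carrying a call
        self.busy = {}              # channel -> call id

    def allocate(self, call_id):
        """Reserve a free channel for the call, or return None if the cell is full."""
        if not self.free:
            return None
        channel = min(self.free)
        self.free.remove(channel)
        self.busy[channel] = call_id
        return channel

    def release(self, channel):
        """Return a channel to the free pool."""
        del self.busy[channel]
        self.free.add(channel)


def hand_off(call_id, old_cell, old_channel, new_cell):
    """Move a call to new_cell, releasing the old channel only on success.

    Returns the new channel, or None if the new cell has no free channel,
    in which case the call stays on its old channel.
    """
    new_channel = new_cell.allocate(call_id)
    if new_channel is None:
        return None
    old_cell.release(old_channel)
    return new_channel
```

Reserving the new channel before releasing the old one is what keeps the call connected during the switch; doing that for thousands of moving customers at once is the bookkeeping that called for high-speed computers.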

All of this shows how, until recently, mobile phones were never a leading technology, one that inspires innovation in other fields. Instead, they had to wait for advances in electronics to come along, then adopt them: circuit boards, chips, better batteries, microprocessors, and so on. Only in the last 10 to 15 years has there been enough demand that mobile telephony can be a creator instead of a user of basic innovations.

But in the same month, December 1947, that Ring wrote his farsighted memo, another group of Bell Labs scientists came up with a fundamental building block of electronics, perhaps the greatest invention of the twentieth century. It was the transistor, jointly invented by William Shockley, John Bardeen, and Walter Brattain. Compared with tube equipment, transistors promised smaller size, lower power drain, and better reliability. By the mid-1950s you could buy a transistor radio that would fit in a shirt pocket.

This raises another question: Even without cellular technology, couldn’t the bulky car-mounted radiotelephones of MTS have been miniaturized as well? Why were there no portable phones until the 1970s?

The answer is that while transistor radios only had to receive a signal and amplify it a bit, mobile telephones had to send one. In the MTS system, this signal often had to be as strong as 30 watts, since the receiver it was being sent to could be far away. Such a high power level was possible for a car battery, but it would require too many individual batteries for a hand-held phone to be practical or portable. Cellular radio, however, with its large number of transceivers, would reduce a mobile phone’s power-transmitting requirement to less than a watt. It would also operate at higher frequencies, which need less power as long as a line of sight exists between transmitter and receiver. This advantage, along with advances in making batteries smaller, would eventually allow a true walk-around phone.

While waiting for the necessary outside technologies to develop, Bell Labs laid the groundwork for cellular telephony, developing Ring’s conceptual sketch into a complete set of working plans. In this way, AT&T made sure it would be ready when the required computing power and the required spectrum became available. In pursuit of the latter, the Bell System petitioned the FCC in 1958 for 75 MHz of spectrum in the 800 MHz band, enough to accommodate thousands of callers at once. (The Bell System was a familiar name for AT&T; it encompassed the local operating companies, such as Southwestern Bell, as well as Bell Labs and the company’s manufacturing and long-distance subsidiaries.)

This was a tough sell, because the FCC was reluctant to give out large blocks of scarce spectrum space. Any allocation could occur only after a lengthy period of study and then a series of contentious hearings and lawsuits among dozens of competing groups. Radiotelephones were a low priority; they needed lots of bandwidth and seemed likely to benefit only a wealthy few. AT&T promised improvements that would make mobile-phone service cheaper and more efficient, but they were far in the future, and even so, was it anything the world really needed? Regulators were skeptical, so AT&T’s 1958 request languished for more than a decade.

Another problem was that, as always, people in and out of government were suspicious that AT&T would abuse its dominant market position. As Don Kimberlin, a radio historian who spent 35 years with the Bell System, explains, “Since 1948 Ma Bell had eyed the radio spectrum at 900 MHz and beyond for its cellular future. That was why Bell made a strong stab in 1947 to try to get the FCC to simply hand over everything above UHF television to the telcos [i.e., telephone companies]. They really wanted all of it, to use in whatever way they might evolve technology, to rent it to the public. People like television broadcasters, who were already using their own private microwaves, not to mention various utilities and such, did not like the notion of having to rent those from The Phone Company at all!”

Silicon to the Rescue

Meanwhile, another enormous advance in electronics was taking place. In 1958 Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor, working independently, created and improved the integrated circuit, which would develop into what we now know as the silicon chip. Transistors had made electronics smaller, but not small enough: There was a limit to how many you could put on a circuit board before you ran out of room. Noyce and Kilby found ways to miniaturize transistors and other components and squeeze them all onto a tiny piece of silicon. By the end of the 1960s memory-storage chips were being manufactured and sold commercially, and soon afterward came microprocessors, which act like tiny computers. Today a chip smaller than a penny can hold 125 million transistors and contain the entire circuitry of a mobile phone.

As AT&T looked ahead to a cellular future, it continued making modest improvements to its existing MTS service. In 1964 the Bell System, together with independent telephone companies and equipment makers, introduced Improved Mobile Telephone Service (IMTS). This finally allowed direct dialing, automatic channel selection, and duplex channels, and it required less bandwidth for each call. The apparatus was slimmed down to a still-hefty 40 pounds. But upgrading MTS to IMTS was expensive, and with the bandwidth-imposed limits on the number of subscribers, it didn’t always pay. In many areas it was never deployed. IMTS was the last network improvement in the pre-cellular era.

By 1968 the three narrow frequency bands allotted to mobile telephones (35 to 43 MHz, 152 to 158 MHz, and 454 to 459 MHz) had become so overcrowded that the FCC had to act. The commissioners looked again at the 1958 Bell System request for more frequencies, which had been tabled when it was first submitted. Still thinking that mobiles were a luxury, some resisted this investigation. The FCC commissioner Robert E. Lee said that mobile phones were a status symbol and worried that every family might someday believe that its car had to have one. Lee called this a case of people “frivolously using spectrum” simply because they could afford to.

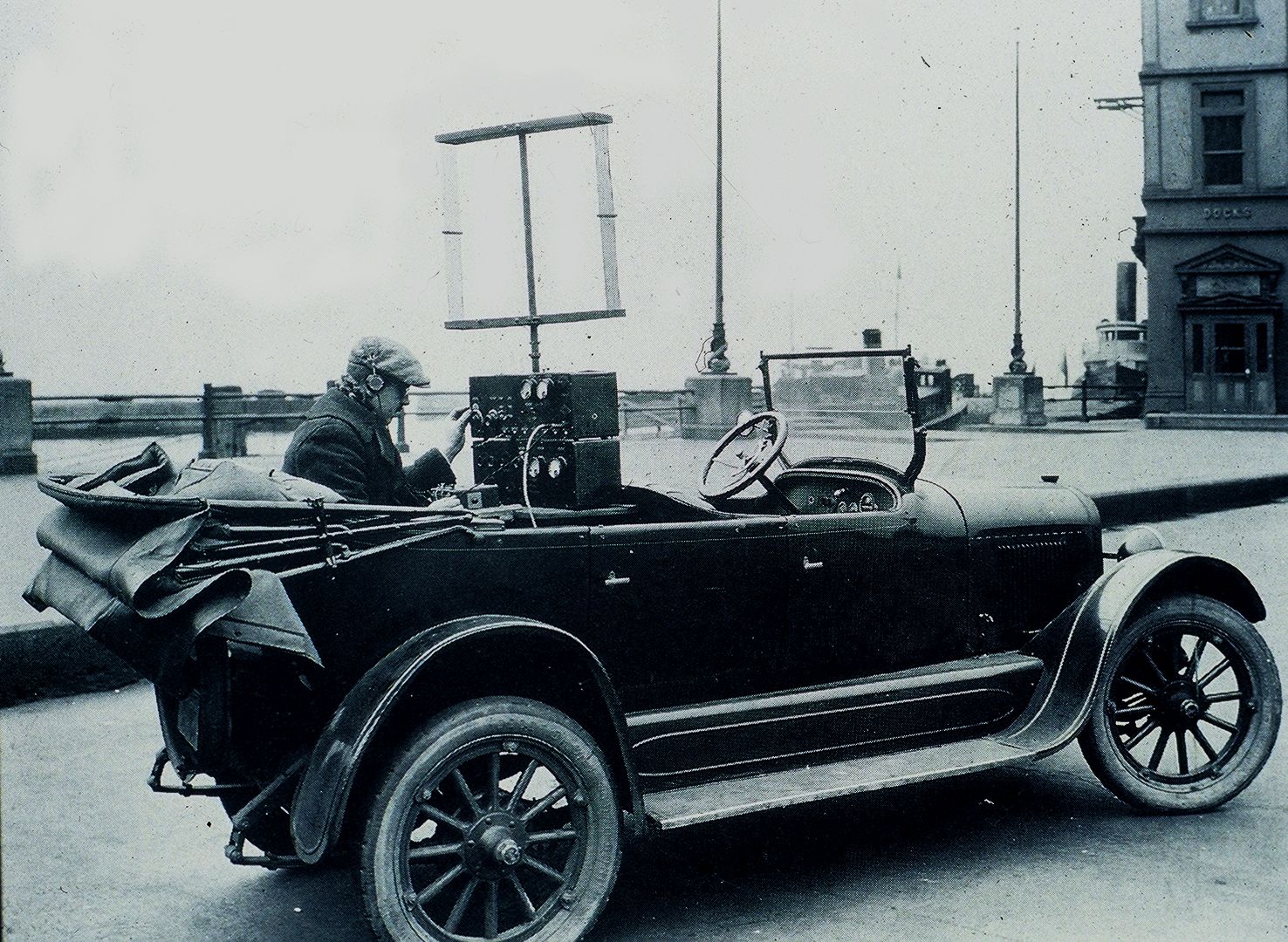

The first mobile phones were installed on Amtrak Metroliners

Despite this opposition, the FCC warmed to mobile phones after the Bell System began operating a cellular system (albeit a one-dimensional one) for the first time in January 1969. A set of public pay phones was installed onboard the Penn Central’s Metroliner, which ran 225 miles between New York City and Washington, D.C. The route was divided into nine zones, or cells, with a different set of frequencies used in each adjacent cell. The U.S. Department of Transportation had requested this service to help promote the high-speed Metroliner, which it hoped would encourage the use of intercity rail.

Six total channels were assigned. When a cell boundary was reached, the train tripped a sensor along the track. That sent a signal to the computerized control center in Philadelphia, which handed off the call to the next cell and switched the frequency. The phones used Touch-Tone dialing, and the Bell Laboratories Record reported that “customers rarely have to wait more than a few minutes for a free channel.” Everyone was pleased with the results, including the newly inaugurated President, Richard Nixon, who rode the inaugural Metroliner run.

John Winward, a radio engineer, installed all the telephones on the Metroliner. He recalls what happened next: “After our success with the Metroliner, the FCC approached AT&T and suggested that spectrum would be made available if the Bell System could show them a workable [car-based] system in two years. That’s when AT&T went all out to make it happen. I was soon loaned to Bell Labs and was a member of the ground team that actually proved in the cellular concept. Much of the equipment we used was hand-built due to a lack of off-the-shelf equipment for the frequencies we were working with… .

“There were seven of us on the field development team. We were up on the roofs in the cold and snow doing all of the grunt work to make the cell phone concept work. It was a lot of fun and fellowship, but no credit for our achievement. It was sort of like winking at a pretty girl in a dark room; we knew we were doing it, but no one else did. Of course, at the time, no one knew just how cellular radio would evolve.”

The field team proved that the idea was feasible. They handed their technical report over to the FCC and then to Motorola in early 1970. The plan was for AT&T to make money from long-distance calls, while Motorola would build the equipment; this arrangement was made for regulatory and antitrust reasons. No one knew that Motorola would soon be a wireless competitor or that the Bell System would eventually be broken up.

With field results in, and after 24 years of research and planning, Bell Telephone Laboratories filed a patent application on December 21, 1970, for a mobile communication system. Cellular radiotelephony was ready, but the FCC was still dragging its feet. Would the hundreds of frequencies needed to make the system work ever become available? With all the new developments in electronics, the competition for spectrum was fiercer than ever, and car phones still didn’t seem like a necessity. But when microprocessor chips arrived on the market (Intel’s 4004, in November 1971, was the first), they made possible a new application, one that would combine with Bell’s cellular system to make an irresistible technology: the hand-held mobile phone.

Situation Well in Hand

The earliest prototype of such a device was built by AT&T’s longtime partner Motorola. (See “Hold the Phone!” in this issue.) At the time, Motorola sold mostly hardware and AT&T sold mostly telephone services; Motorola had been supplying the Bell System with car-based radiotelephones for decades. The relationship was symbiotic, which is why AT&T gave its data to Motorola.

But the looming specter of cellular service made AT&T more of a threat than a friend. Motorola’s main business was dispatch radio systems for taxi companies, utility fleets, and police and fire departments, an industry AT&T had nothing to do with. Motorola built everything: the transmitters, receivers, and processors. If cellular was successful, though, dispatch customers might move to the new service, especially since the Bell System was building its cellular plans around vehicle-based phones.

Even if Motorola got to build some equipment for that service, most of the overall revenues would still go to the Bell System. So Motorola needed its own cellular system to compete with AT&T. And while it had no intention of abandoning the vehicle market, Motorola knew it could make a bigger splash, and perhaps get a better response from the FCC, by introducing a brand-new wrinkle. They needed something, because for many decades the first impulse of regulators had been to extend AT&T’s monopoly (which was considered “natural” in such a technical and capital-intensive field) and let it apply its unmatched engineering prowess undistracted by competition. While no one thought hand-held phones would become widespread, Motorola’s prototype would attract attention and show the FCC that AT&T was not the only cellular game in town.

On April 3, 1973, Motorola demonstrated the world’s first hand-held cell phone. It made Popular Science’s July 1973 cover. The phone used 14 large-scale integration chips with thousands of components apiece; some of them had been custom-built. No matter that it weighed close to two pounds or that talk life was measured in minutes; a go-anywhere telephone had finally been built. (At one point during the demonstration Motorola’s Martin Cooper accidentally called a wrong number. After a pause he said, “Our new phone can’t eliminate that, computer or not.”) The Popular Science article said that with FCC approval, New York City could have a Motorola cellular system operating by 1976. That approval didn’t come, but Motorola’s demonstration had achieved the desired effect, as the FCC began earnestly studying cellular issues.

In 1974 the FCC set aside some spectrum for future cellular systems. The commission then spent years drafting an endless series of rules and regulations for the cellular industry to follow. Every wireless carrier and manufacturer would have to abide by the FCC’s technical minutiae. Although these restrictions delayed the arrival of cellular technology, there was a great benefit: compatibility. In the early days, at least, American mobiles would work throughout the United States, even on systems built by different manufacturers using different handsets.

AT&T started testing its first complete cellular (but still not hand-held) system in July 1978 in the suburbs of Chicago. The system had 10 cells, each about a mile across (today’s cells are usually three to four miles across), and used 135 custom-designed, Japanese-built car phones. This experimental network proved that a large cellular system could work. But when would the general public get it? The technology was in place, and money was available to finance it; now all AT&T needed was a go-ahead from the FCC, in the form of a spectrum allocation.

A map of cell-phone towers in Texas as of 2005, with different colors representing different companies.

(www.steelintheair.com)

The FCC continued in its maddeningly deliberate way, but at least it was starting to appreciate the importance of what AT&T had requested. In April 1980 Charles Ferris, chairman of the FCC, told The New York Times, “I believe that, once implemented, cellular’s high quality and spectrum efficiency will revolutionize the marketplace for business and residential communications.” Still, in a docket from that era called “Examining the Technical and Policy Implications of Cellular Service,” an FCC official predicted a delay of four years until commercial service became available. Fortunately the FCC saved time by adopting technical standards drawn up by the Electronic Industries Association. The task of developing specifications could now move more quickly.

Pressure was growing on the FCC to act fast, because when Ferris made his remarks, only 54 radiotelephone channels existed in the entire United States. At least 25,000 people were on waiting lists for a mobile phone; some had been there for as long as 10 years. (Doctors got higher priority, so an improbably high number of applicants claimed to have medical credentials.) New York City had 12 channels and 700 radiotelephone customers, roughly one per 10,000 population. The total number of mobile phones nationwide was about 120,000.

Sorry, You’re Breaking Up

While the FCC was mulling it over, a court decision threw the communications world into upheaval. On August 24, 1982, after seven years of wrangling with the U.S. Justice Department, AT&T agreed to be split apart. Regional telephone companies like New York Telephone and Pacific Bell would be spun off as independent entities, while AT&T would retain Bell Labs, among other interests.

When business analysts and pundits assessed the probable results of the breakup, cellular service barely got a mention. It still had not advanced much from the 1946 version, so it was considered a minor sideline at best. But before AT&T’s reorganization became final, the company would provide a glimpse of the future by making its 36-year-old dream for a high-capacity mobile telephone system a reality.

With its spectrum allocation finally in hand, AT&T began its Advanced Mobile Phone Service (AMPS) in Chicago on October 12, 1983. The research and development costs had been enormous: Since 1969 the Bell System had spent $110 million developing its cellular system. That would be about half a billion in today’s dollars, and it had been spent with no firm idea of how AT&T would recoup its investment. A month later Motorola’s competing but compatible system, Dynatac, debuted in Washington, D.C., and Baltimore. Motorola had probably spent $15 million on cellular R&D. Within three years the two systems would be operating in America’s 90 largest markets.

Nonetheless, when AT&T’s breakup became official, on January 1, 1984, AT&T willingly relinquished the Bell System’s wireless operating licenses to its former regional telephone companies. Why didn’t it fight harder to keep them?

AT&T, along with everyone else, still underestimated the potential of cellular technology. In 1980 the corporation had commissioned McKinsey & Company to forecast cell-phone use by 2000. The consultant’s prediction: 900,000 subscribers. This was off by a factor of about 120; the actual figure was 109 million. AT&T paid dearly for its mistaken giveaway. In 1993, to rejoin the cellular market, it bought Craig McCaw’s patched-together nationwide network, McCaw Cellular, for $12.6 billion.

Other observers made mistakes similar to McKinsey’s. In 1984 Fortune magazine predicted a million users by the end of 1989. The actual figure was 3.5 million. In 1990 Donaldson, Lufkin & Jenrette predicted 56 to 67 million users by 2000. They were off by 40 to 50 million. In 1994 Herschel Shosteck Associates estimated the total for 2004 at between 60 and 90 million. The actual number: 182 million.

Everybody underestimated the demand, mostly because of the persistent belief that average citizens would neither need nor be able to afford cellular service. These mistaken predictions put great pressure on the carriers, which struggled to operate networks not originally designed for such heavy loads. This made dropped calls and persistent busy signals even more common than they are today. As the 1980s came to a close, the capacity crunch forced, or at least accelerated, a transition from analog systems to digital.

Going digital makes telephone transmission more efficient in two ways. First, when a signal is digitized, it can be compressed, requiring less bandwidth. Second, digital signals can be multiplexed, meaning that many signals can be carried on a channel that previously accommodated only one.

Why? Let’s begin with digitizing. Sound is a waveform that travels through air or some other medium. It can easily be converted into a rising and falling voltage; that’s how Alexander Graham Bell built his first telephones in the 1870s. This rising and falling voltage—an analog signal—can be digitized with a device called a vocoder, which samples the signal strength every 20 milliseconds or so, quantifies the results, and converts the figures to binary code. In this way, the voltage has been reduced to a sequence of 1’s and 0’s—a continuously flowing stream of bits.
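The last step described above, turning measured voltages into binary code, can be sketched as follows. This covers only quantizing and encoding; a real vocoder does far more to model speech. The 8-bit resolution, the voltage range of -1.0 to 1.0, and the function names are assumptions made for illustration.

```python
# Sketch of converting sampled voltages into a stream of bits.
# Assumptions (not from the article): samples have already been taken and
# scaled to the range -1.0..1.0, and each is stored as an 8-bit number.

BITS_PER_SAMPLE = 8
LEVELS = 2 ** BITS_PER_SAMPLE  # 256 distinct values


def quantize(voltage: float) -> int:
    """Map a voltage in [-1.0, 1.0] to the nearest integer level in [0, 255]."""
    clipped = max(-1.0, min(1.0, voltage))  # out-of-range inputs are clipped
    return int((clipped + 1.0) / 2.0 * (LEVELS - 1) + 0.5)


def to_bits(samples) -> str:
    """Encode each sample as a fixed-width binary number and join them."""
    return "".join(format(quantize(v), f"0{BITS_PER_SAMPLE}b") for v in samples)


print(to_bits([-1.0, 0.0, 1.0]))
```

Fewer bits per sample means a smaller stream but a coarser copy of the original voltage, which is the tradeoff between capacity and fidelity in its simplest form.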

The vocoder is part of a larger unit called a digital signal processor chip set. It uses various tricks to reduce the number of bits that are transmitted while still keeping your voice recognizable—sort of like using shorthand or omitting letters from words in a text message. Another compression trick is digital speech interpolation. Since 60 percent of a typical conversation is silence, this technique transmits only during voice spurts, letting another signal use the channel during pauses.

These and other methods simplify a voice signal at the cost of degrading its fidelity and intelligibility somewhat. When the compression is modest, it’s barely noticeable, but at high levels it can give callers Donald Duck voices or make them sound as if they’re underwater. Wireless carriers specify the amount of compression in the phones they sell, trying to balance sound quality with the constant need to carry more signals. Some amount of tradeoff between these two is unavoidable. Modern cell-phone service would be impossible without digitalization, but there’s no denying that the old analog mobiles sounded much better.

The second way that digitalization uses spectrum more efficiently is with multiplexing. The earliest digital signals packed three calls on a single channel using a multiplexing technique called time division. In this system, three different calls are interleaved at regular, precise intervals: perhaps 20 milliseconds of call A, then 20 milliseconds of call B, then 20 milliseconds of call C. At the receiving end, the user’s telephone extracts its own part of the signal and ignores the rest.
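The interleaving described here can be sketched in a few lines. Each call is represented as a list of labeled chunks standing in for the 20-millisecond slices; the function names are hypothetical.

```python
# Sketch of time-division multiplexing for three calls on one channel.
# Assumptions (not from the article): each call is a list of equal-size
# chunks standing in for 20-millisecond slices of digitized speech.


def interleave(calls):
    """Merge several calls into one stream: A0, B0, C0, A1, B1, C1, ..."""
    stream = []
    for slots in zip(*calls):   # one slot from each call per rotation
        stream.extend(slots)
    return stream


def extract(stream, slot_index, num_calls):
    """Recover one call by keeping every num_calls-th slot."""
    return stream[slot_index::num_calls]


calls = [["A0", "A1"], ["B0", "B1"], ["C0", "C1"]]
stream = interleave(calls)
print(stream)
print(extract(stream, 1, 3))
```

The receiving phone needs to know only its position in the rotation; everything else on the channel is skipped.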

American cellular providers began switching to digital in January 1989. As a standard the Telecommunications Industry Association chose a technique called time division multiple access (TDMA). This provided digital service while still accommodating analog, though analog phones were being phased out.

Time division was a well-established technology in land-line telephony (a T-1 line uses it), and it made sense to adopt it for digital wireless. But for all its improvements over analog, TDMA had limitations that would become more apparent as cell-phone usage exploded. A few innovators began to ask: Instead of trying to squeeze more efficiency out of time division, why not consider a different approach, one literally from outer space?

A Little More Spectrum, a Lot More Users

At the time, the San Diego firm Qualcomm had a solution looking for a problem. Qualcomm was expert at military satellite communications, and its specialty was called Code Division Multiple Access (CDMA)—what’s often called spread spectrum. CDMA assigns each user a unique code. Then it breaks the user’s signal into pieces, attaches the code and a time stamp to each piece, and sends them over a variety of frequencies. The receiver’s phone grabs the pieces that bear the code it’s looking for and puts them in order using the time data.
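The core idea, that a receiver can pick its own signal out of a shared channel by looking for its code, can be shown with a simplified sketch. This uses direct-sequence spreading with two short orthogonal codes, which is one textbook way to realize code division; it is not a model of Qualcomm's actual system and leaves out the time stamps and multiple frequencies mentioned above. The codes and function names are assumptions.

```python
# Sketch of two calls sharing one channel by code division.
# Assumptions (not from the article): direct-sequence spreading with two
# four-chip orthogonal codes, and a noiseless channel that simply adds signals.

CODE_A = [1, -1, 1, -1]
CODE_B = [1, 1, -1, -1]   # orthogonal to CODE_A: their dot product is zero


def spread(bits, code):
    """Replace each bit with the code (for 1) or its negative (for 0)."""
    chips = []
    for bit in bits:
        symbol = 1 if bit else -1
        chips.extend(symbol * c for c in code)
    return chips


def combine(*signals):
    """Add the transmissions together, as they would overlap on the air."""
    return [sum(chips) for chips in zip(*signals)]


def despread(channel, code):
    """Correlate each block of chips with a code to recover that user's bits."""
    n = len(code)
    bits = []
    for start in range(0, len(channel), n):
        block = channel[start:start + n]
        correlation = sum(x * c for x, c in zip(block, code))
        bits.append(1 if correlation > 0 else 0)
    return bits


channel = combine(spread([1, 0, 1], CODE_A), spread([0, 0, 1], CODE_B))
print(despread(channel, CODE_A), despread(channel, CODE_B))
```

Both calls occupy the channel at the same moment, yet each receiver recovers only the bits sent with its own code because the other code cancels out in the correlation.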

TDMA has inherent limitations: It sticks to one frequency per signal and uses a rigid time rotation to multiplex several calls. It does this even when one or more of the signals doesn’t need its full allotment of time. But CDMA uses only as much transmission time as a conversation requires, and it spreads the signal out over many frequencies instead of just one. This greater flexibility lets even more calls be carried per frequency, two to three times as many as TDMA. The improvement is even greater when working with data, which is typically sent in short, intense bursts separated by long periods with little or no change. With CDMA, the bursts can be handled by many channels at once.

But that’s not the biggest advantage CDMA offered. Analog and time-division systems have to use different sets of frequencies in adjacent cell sites, but Qualcomm thought its system could eliminate this restriction. That way a carrier could use every frequency assigned to it in every cell site, greatly increasing the number of calls it could handle. To do this, Qualcomm’s system would have to constantly adjust every mobile phone’s power output, depending on how close it was to the transceiver.

Imagine a crowded cocktail party. The noise level goes up as more and more people enter the room. But if everyone talks just loudly enough to be heard by their listener, the noise level will rise much more slowly and many more people can talk without drowning each other out. Applying this idea to cellular service would, of course, require large amounts of high-speed computing, which was just becoming affordable in the late 1980s.
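The cocktail-party rule can be sketched as a simple feedback loop. The Python below is a simplified illustration, not Qualcomm's algorithm: the target level, the one-decibel step, and the path-loss figures are all assumed values, and `power_control` is a hypothetical name. Each phone is nudged up or down until it arrives at the base station at roughly the same strength as every other phone.

```python
TARGET_DBM = -100.0  # hypothetical received level the base station asks for
STEP_DB = 1.0        # hypothetical size of each up/down adjustment


def power_control(tx_dbm: float, path_loss_db: float, rounds: int = 60) -> float:
    """Step the phone's transmit power up or down until it arrives near the target."""
    for _ in range(rounds):
        received_dbm = tx_dbm - path_loss_db
        if received_dbm > TARGET_DBM:
            tx_dbm -= STEP_DB  # too loud at the base station: turn down
        else:
            tx_dbm += STEP_DB  # too quiet (or just at target): turn up
    return tx_dbm


# A phone close to the tower loses little signal on the way; a distant one loses a lot.
near_tx = power_control(tx_dbm=20.0, path_loss_db=80.0)
far_tx = power_control(tx_dbm=20.0, path_loss_db=120.0)

near_received = near_tx - 80.0
far_received = far_tx - 120.0
print(near_tx, far_tx, near_received, far_received)
```

The nearby phone ends up transmitting far more quietly than the distant one, yet both reach the tower at about the same level, so neither drowns the other out.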

In 1988 Qualcomm had little more than some good ideas and a few patents. Its founders—Irwin Jacobs, Andrew Viterbi, and Klein Gilhousen—had no actual technology in hand. To make its system work, the company would need brand-new handsets, base-station equipment, chip sets, and software, all designed from scratch and manufactured into a working, practical turnkey system. But Qualcomm had no factories and no cellular experience—unlike AT&T, Motorola, and Ericsson, the three biggest names in cell-phone service at the time.

That didn’t matter to Qualcomm. The company began a crash development program, and by late 1989 it had successfully demonstrated a prototype CDMA cellular system. In the next few years it built CDMA systems overseas while waiting for U.S. approval. The first American installation came in 1995. Like its TDMA rival, CDMA also accommodated existing analog phones, which by that point were getting rarer. Qualcomm’s innovations put the United States back in the technological forefront as far as coding is concerned, though the Europeans and Asians continue to lead in handset innovation.

Despite digitalization, in the early 1990s American cellular telephony was again running out of capacity. The number of subscribers exploded from 2 million in 1988 to more than 16 million in 1993. Better technology would be a great help, but it could never solve the problem by itself. More spectrum had to be licensed, and with the rapid growth in users, the FCC was now willing to do this. The FCC completed auctioning new frequency blocks in January 1997. The availability of hundreds of new frequencies allowed yet another digital system, a European-developed scheme called GSM, to grow and prosper in America.

GSM originally stood for Groupe Spécial Mobile, after the committee that had designed the specifications (it now stands for Global System for Mobile Communications). In the 1980s Europe had nine incompatible analog radiotelephone systems, making it impossible to drive across the continent and stay connected. Instead of crafting a uniform analog cellular network, telecommunications authorities looked ahead to a digital future. Their system succeeded far beyond Europe, becoming a de facto digital standard for most of the world. GSM, introduced in 1991, now has close to a billion subscribers. GSM is fully digital and incompatible with analog phones.

Today’s American digital cellular systems come in four varieties. Three use time division: TDMA (which is now being phased out), GSM, and Nextel (which is based on Motorola’s proprietary technology, now known as iDEN). Qualcomm’s technology is the only system available in America that uses code division. Verizon and Sprint use CDMA, while Cingular and T-Mobile use GSM. Thanks to Motorola’s foresight in 1973, the FCC requires two competing systems to be available in every market.

From Voice to Versatility

The latest upheaval in the cell-phone world comes from the larger information revolution. Ten years ago a mobile phone was thought of as simply a phone that was mobile. That notion began to crumble in late 1996 when Nokia, a Finnish firm, introduced the Communicator in Europe. A GSM mobile phone that was also a hand-held computer, it was later marketed in the United States, with little success. The Communicator marked an important shift in cellular service from emphasizing voice to concentrating on data. It had a tiny keyboard and built-in word-processing and calendar programs. It sent and received faxes and e-mail and could access the Internet in a partial way. The Communicator’s effectiveness was limited, since cellular networks were optimized for voice, not data. Still, it showed where the cell-phone market was heading. Data quickly became the first interest of system designers, though voice remained the essential service for most mobiles.

In the mid-1990s phones went beyond voice...

(Courtesy of Nokia, Inc.)

Cellular phones have gotten much smaller over the years, of course, sometimes merging with other devices, as when cell-phone circuitry was built into laptops and PDAs and instruments like the BlackBerry. The camera has been the mobile’s most significant hardware improvement in the last decade. The first all-in-one camera phone, in 2000, let users take, send, and receive images by e-mail. The phones proved popular immediately, although most people store the images and download them to their PCs instead of sending the bulky files over slow cellular networks. A close second in hardware improvements would be lithium-ion batteries, which have finally allowed users to leave their mobile phones on all day.

On the network end, the most important improvement over the last decade has been the spread of code division, chiefly for the greater capacity it makes possible. While GSM uses time division now, its next iteration will be based on code division. Most companies are looking for ways to build a code-division system without paying royalties to Qualcomm. Yet even with this shift, channel capacity will always remain inadequate. There will never be enough spectrum at peak times for all the bells and whistles a wireless carrier wants to provide and all the customers it wants to serve.

At the beginning of the twenty-first century, cell phones entered a services era, delivering ring tones, image capturing, text messaging, gaming, e-mail, “American Idol” voting, and the start of shaky video. This trend took off when prices dropped enough for the mobile to change from a business tool to one the mass market could afford. By the end of this decade the mobile’s most significant software use will be Voice over Internet Protocol (VoIP). This converts your speech into bits directly at your computer, making the laptop an extremely powerful mobile phone. Plans are also afoot to connect WiMax and WiFi local networks into regional cellular networks, greatly expanding communications.

In 2007 the worldwide total of cell-phone subscribers will exceed two billion. Some countries now have more cell phones than people. As has been true throughout its history, regulatory, technical, and competitive problems remain for mobile telephony. But humanity’s insatiable desire to communicate ensures an imaginative and successful future for the mobile phone. The effects are perhaps most visible in developing countries, where cell phones have made landline service, with its expensive infrastructure, almost unnecessary. In some parts of Africa, cell phones are the driving force in modernizing the region’s economy.

A few final thoughts come from John Winward, who installed the first cellular phones on the Metroliner in 1968. “I really do like knowing that I was a part of cellular development. … I still get a weird feeling when I see people walking around with tiny little telephones dangling everywhere, talking from an aisle in the supermarket. I almost want to walk over and ask what kind of equipment they are using and hope they will let me listen for a few seconds to see what the signal-to-noise ratio is. The feeling comes from being able to remember IMTS, and our own mindset about cellular at the time. Boy, were we off the mark!

“There was just no way we could have known what cellular would evolve to and how valuable it was going to be. At the time, there was no such thing as surface mount technology, lithium-ion batteries, and high-density circuitry, so no one could really imagine the meaning of the word small.”

Winward concludes: “Cellular is far from being finished; there are too many great minds working on it now.”

Tom Farley is the founder of privateline.com, a site devoted to telecommunications.